Get your team up to speed with our Official Workshops, bringing certified training to your studio or educational institution. The standard curriculum can be fully customized to meet your specific requirements.

Overview

On Location

We bring certified training to your studio or educational institution

Customize

Choose the focus of your Workshop, from introductory to advanced topics

Scheduling

Workshops run from three to five days, and can be arranged at your preference

Certification

All participants who finish the Workshop will receive an Official Certificate of Completion

Onsite Training

We will send certified trainers to your studio or institution to provide on-site training for groups of up to 15 people. These exclusive workshops are held at your location and scheduled at your convenience to minimize disruption.

Customization

You may customize your Workshop to meet the requirements of your studio. For instance, choose to focus on Katana, Houdini, or Maya integration. Select introductory or advanced subjects. Cover technical topics such as shader writing, or specific workflows like using Substance Painter with RenderMan.

-Ernst Janssen Groesbeek, 9to3 Animation

Standard Curriculum

Workshops can be scheduled from three to five days, with the standard curriculum covering the following:

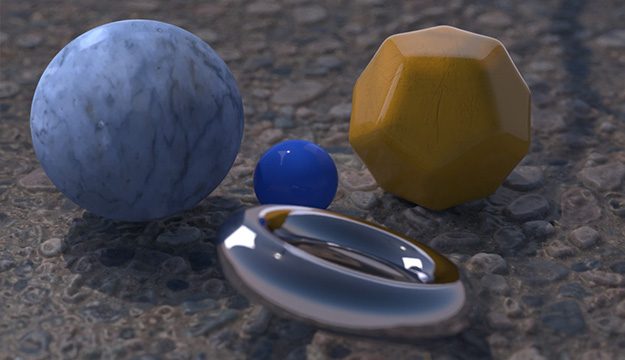

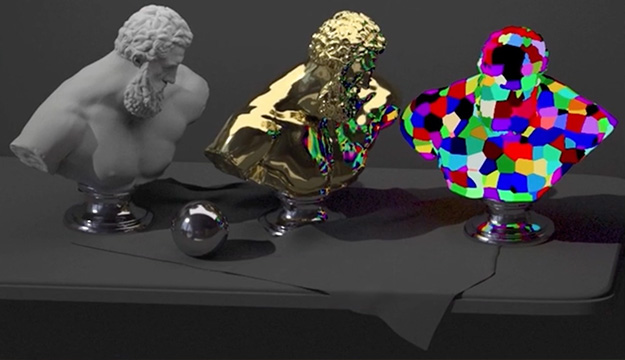

Look Development

|

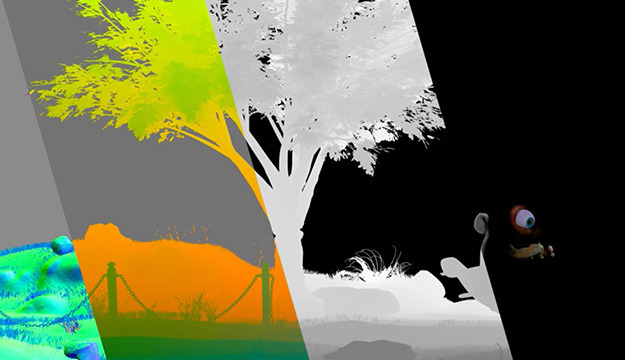

Lighting

|

Integrators

|

Tractor and Farm Rendering

|

Additional Topics

|

|

Cost & Scheduling

We are happy to work with you to find the best Workshop solution for your studio or institution. Please write to us describing your interest and preferred timing, and we will get back to you with a proposal and quote.